CLIPort

Two-Stream Architecture

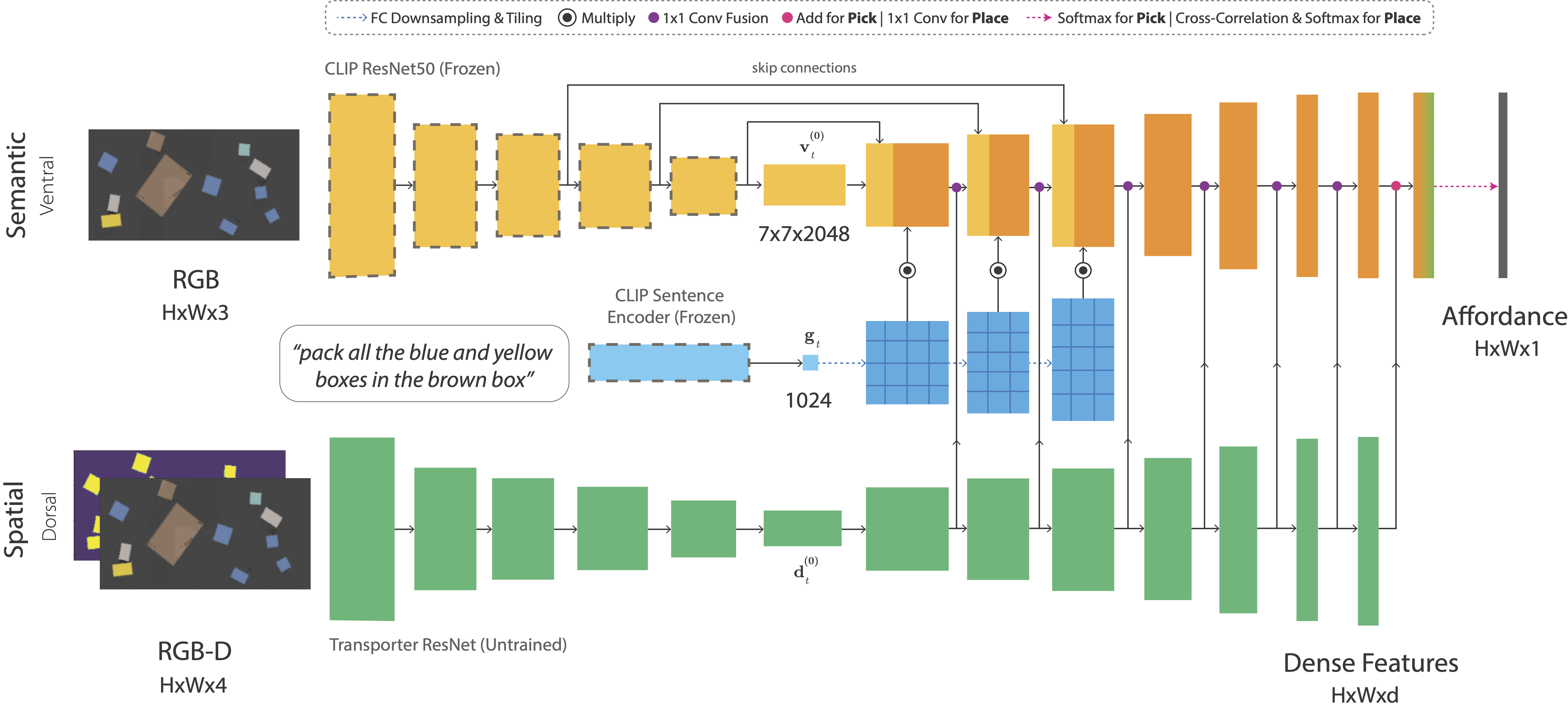

Broadly inspired by (or vaguely analogous to) the two-stream hypothesis in cognitive psychology, we present a two-stream architecture for vision-based manipulation with semantic and spatial pathways. The semantic stream uses a pre-trained CLIP model to encode RGB and language-goal input. Since CLIP is trained with large amounts of image-caption pairs from the internet, it acts as a powerful semantic prior for grounding visual concepts like colors, shapes, parts, texts, and object categories. The spatial stream is a tabula rasa fully-convolutional network that encodes RGB-D input.

Paradigm 1: Unlike existing object detectors, CLIP is not limited to a predefined set of object classes. And unlike other vision-language models, it's not restricted by a top-down pipeline that detects objects with bounding boxes or instance segmentations. This allows us to forgo the traditional paradigm of training explicit detectors for cloths, pliers, chessboard squares, cherry stems, and other arbitrary things.

TransporterNets

We use this two-stream architecture in all three networks of TransporterNets to predict pick and place affordances at each timestep. TransporterNets first attends to a local region to decide where to pick, then computes a placement location by finding the best match for the picked region through cross-correlation of deep visual features. This structure serves as a powerful inductive bias for learning roto-translationally equivariant representations in tabletop environments.

Credit: Zeng et. al (Google)

Paradigm 2: TransporterNets takes an action-centric approach to perception where the objective is to detect actions rather than detect objects and then learn a policy. Keeping the action-space grounded in the perceptual input allows us to exploit geometric symmetries for efficient representation learning. When combined with CLIP's pre-trained representations, this enables the learning of reusable manipulation skills without any "objectness" assumptions.

Results

Single-Task Models

Trained withOne Multi-Task Model

Trained withAffordance Predictions

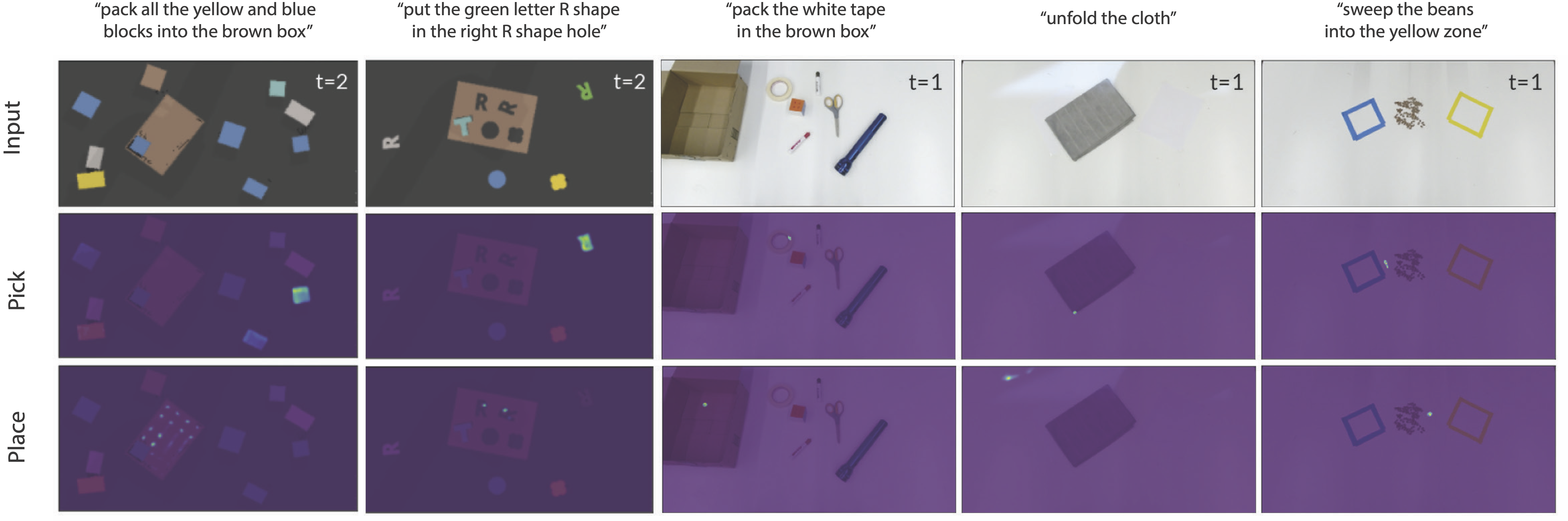

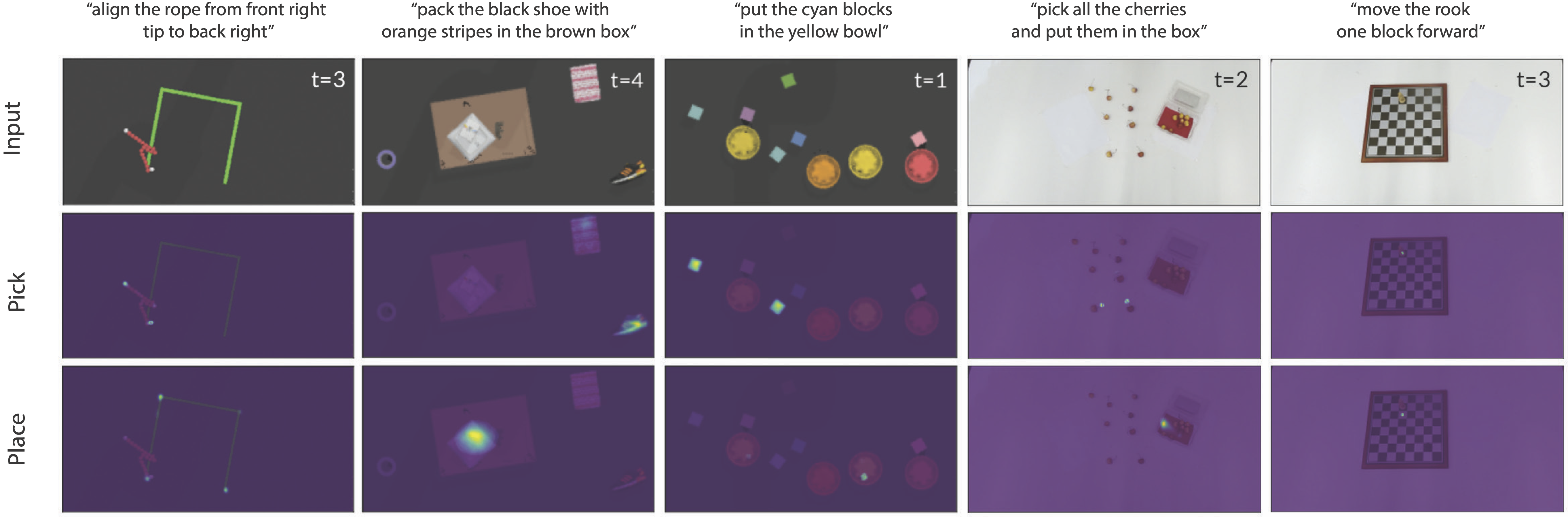

Examples of pick and place affordance predictions from multi-task CLIPort models:

BibTeX

@inproceedings{shridhar2021cliport,

title = {CLIPort: What and Where Pathways for Robotic Manipulation},

author = {Shridhar, Mohit and Manuelli, Lucas and Fox, Dieter},

booktitle = {Proceedings of the 5th Conference on Robot Learning (CoRL)},

year = {2021},

}